Artificial Intelligence (AI) has rapidly transitioned from a buzzword to a game-changer across industries. From healthcare to finance, retail to manufacturing, AI’s integration has redefined how businesses operate, innovate, and engage with customers. An estimated 70% of companies have now adopted at least one type of AI technology, and a report by MarketsandMarkets™ projects that the global AI market will reach over $190 billion by 2025. This growth signals a fundamental shift in how industries use automation, data analysis, and predictive analytics to drive efficiency and growth.

However, as the popularity of AI soars, businesses face an increasingly complex challenge—ensuring that their AI systems comply with legal, ethical, and regulatory standards. In a rapidly evolving landscape, staying ahead of compliance requirements can be daunting. In fact, research shows that nearly 60% of organizations struggle to maintain adequate governance over their AI technologies, with many encountering regulatory uncertainties that vary by region and industry. Balancing innovation with adherence to legal and ethical norms is no small feat, making AI compliance one of the most pressing challenges businesses must address in this transformative era.

This blog explores the key aspects of AI compliance, the challenges businesses face, and best practices to maintain artificial intelligence regulatory compliance while fostering innovation.

AI compliance refers to the adherence of AI systems to regulatory standards, ethical guidelines, and industry best practices. These regulations aim to mitigate risks related to bias, transparency, data privacy, and accountability while ensuring AI-driven decisions are fair and reliable.

Governments and industry bodies worldwide are introducing stringent regulations to govern AI applications, particularly in sectors like healthcare, finance, and cybersecurity. Organizations must stay informed about these evolving laws to prevent legal repercussions and reputational damage.

AI compliance also includes building trust with stakeholders and promoting transparency and fairness in decision-making. Robust cybersecurity measures and risk management strategies are essential components of artificial intelligence regulatory compliance.

The EU AI Act is one of the most comprehensive regulations aimed at classifying AI applications into risk categories—unacceptable, high, limited, and minimal risk. Businesses deploying high-risk AI systems must conduct rigorous assessments, maintain transparency, and implement human oversight mechanisms.
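The four tiers lend themselves to a simple lookup that an internal review could use to track obligations per system. The following is a minimal sketch, not legal guidance: the high-risk entries paraphrase the paragraph above, the entries for the other tiers are simplified summaries, and the system names and their tier assignments in the example inventory are purely illustrative assumptions.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Simplified checklist per tier. Only the HIGH entries come from the text
# above; the rest are rough summaries and assumptions, not legal wording.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy (prohibited practice)"],
    RiskTier.HIGH: [
        "conduct a rigorous risk assessment",
        "maintain transparency documentation",
        "implement human oversight",
    ],
    RiskTier.LIMITED: ["disclose to users that they are interacting with AI"],
    RiskTier.MINIMAL: [],
}


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the checklist tracked for a given risk tier."""
    return list(OBLIGATIONS[tier])


# Hypothetical inventory; assigning a tier is a legal judgment made by
# people, which this code only records.
inventory = {"resume-screener": RiskTier.HIGH, "spam-filter": RiskTier.MINIMAL}
for name, tier in inventory.items():
    print(name, tier.value, obligations_for(tier))
```

Keeping the tier as an explicit field on every system in the inventory makes it easy to see which deployments carry the heaviest obligations.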

Although GDPR primarily governs data privacy, it plays a crucial role in artificial intelligence regulatory compliance, as AI systems process vast amounts of personal data. Companies must ensure AI-driven data processing aligns with GDPR’s principles of lawfulness, fairness, and transparency.

The United States AI Bill of Rights outlines key principles for AI governance, focusing on data privacy, protection against algorithmic discrimination, and explainable, accountable AI decision-making.

As businesses adopt AI at an unprecedented scale, understanding and addressing AI compliance is no longer optional—it’s a critical necessity. The consequences of failing to adhere to compliance standards can be severe. From financial penalties and lawsuits to damaged reputations and lost customer trust, the risks are substantial.

For instance, in 2019, France’s data protection authority (CNIL) fined Google €50 million under the European Union’s General Data Protection Regulation (GDPR) for a lack of transparency and valid consent in its ad personalization. In the United States, state-level laws like the California Consumer Privacy Act (CCPA) and the Illinois Biometric Information Privacy Act (BIPA) have made it clear that businesses must be vigilant about data handling, especially when it comes to AI-driven platforms that rely on vast amounts of personal data. These regulations place heavy emphasis on transparency, accountability, and the protection of user privacy, making it imperative for businesses to ensure their AI systems are aligned with such laws.

Furthermore, AI technologies can have unintended consequences. For example, biased algorithms that unintentionally discriminate against certain groups of people can result in serious ethical and legal issues. AI’s opacity—often referred to as the “black-box problem”—can make it difficult for businesses to understand how decisions are made, complicating compliance with fairness and accountability standards. Thus, AI compliance isn’t just about legal adherence; it’s also about fostering trust with customers and ensuring that AI’s impact on society is positive and ethical.

While the importance of AI compliance is clear, ensuring adherence to these complex frameworks is easier said than done. Businesses face several key challenges:

AI regulations are still in their infancy, and they vary significantly across countries and jurisdictions. For example, the European Union’s Artificial Intelligence Act (AIA) regulates high-risk AI applications, but comparable laws are not yet in place in many other regions. This regulatory patchwork creates uncertainty for businesses that operate across borders, making it difficult to ensure compliance at a global scale.

There is no universal set of AI compliance standards that businesses can rely on. While frameworks like the OECD’s Principles on Artificial Intelligence provide guidance, they are non-binding and often vague. As AI technologies continue to evolve rapidly, regulators are struggling to keep up, leaving businesses to navigate a shifting landscape of emerging rules and guidelines.

One of the most persistent challenges in AI compliance is addressing the potential for algorithmic bias. AI systems are trained on large datasets, and if these datasets contain biased or unrepresentative data, the AI’s decision-making process will reflect those biases. For instance, AI in hiring or lending might inadvertently discriminate against women, minority groups, or other marginalized communities. Detecting and mitigating these biases is not only a technical challenge but a regulatory one as well, as regulators increasingly focus on ensuring fairness and preventing discrimination.
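One common first check for this kind of bias is to compare how often each group receives the favourable outcome. The sketch below computes the ratio of the lowest to the highest group selection rate and flags it against the 0.8 "four-fifths" threshold, a screening heuristic from US employment practice. The group labels and decision counts are made up for illustration, and a low ratio is a prompt for investigation rather than proof of discrimination.

```python
from collections import defaultdict


def selection_rates(records):
    """Map each group to the fraction of its records with a positive outcome.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the model produced the favourable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favoured
    return min(rates.values()) / highest


# Made-up decisions for two hypothetical applicant groups.
decisions = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 25 + [("group_b", False)] * 75
)
ratio = disparate_impact_ratio(decisions)
print(f"ratio={ratio:.3f}, flag_for_review={ratio < 0.8}")
```

Here group_a is selected 40% of the time and group_b 25% of the time, so the ratio falls below 0.8 and the model would be flagged for a closer look.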

AI systems often require vast amounts of personal and sensitive data to function effectively, raising serious concerns about privacy and data security. Regulations like GDPR place stringent requirements on how data is collected, stored, and used, and any breaches can result in hefty fines. Ensuring that AI systems comply with data protection laws while still achieving their intended outcomes is a delicate balancing act.

AI technologies are complex, and ensuring compliance with evolving legal, ethical, and technical standards requires specialized knowledge. Many businesses, particularly small and medium-sized enterprises (SMEs), may lack the necessary in-house expertise to navigate these complexities, making compliance a daunting task.

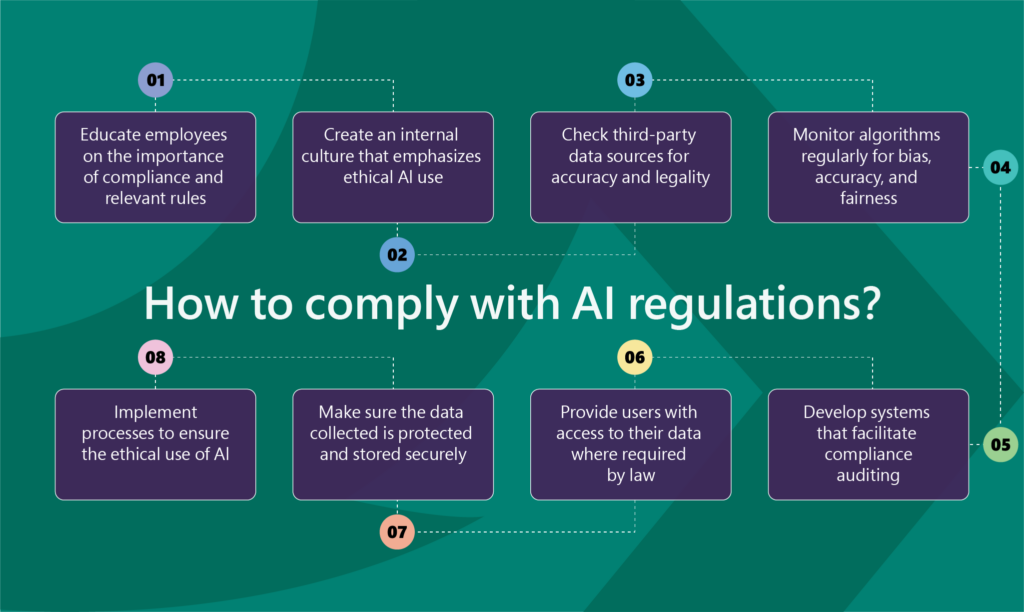

To maintain robust artificial intelligence regulatory compliance, organizations should implement the following best practices:

Regularly assess AI systems for bias, fairness, and compliance with current regulations. Establish a compliance framework to document and track AI-related risks.

Develop AI models that provide clear explanations for their decisions. Use explainable AI (XAI) techniques to enhance transparency and build trust with users.
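For simple models, an explanation can be as direct as showing how much each input moved the score. The sketch below does this for a linear scoring model by comparing an applicant against a baseline profile. The feature names, weights, and values are all hypothetical, and more complex models generally need dedicated XAI tooling rather than this arithmetic.

```python
def explain_linear_score(weights, bias, features, baseline):
    """Break a linear model's score into per-feature contributions.

    Each contribution is weight * (value - baseline value), so the
    contributions sum to score(features) - score(baseline).
    """
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    score = bias + sum(weights[name] * features[name] for name in weights)
    # Sort so the most influential features (by magnitude) come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical credit-scoring weights and applicant; all values are made up.
weights = {"income_k": 0.5, "debt_ratio": -40.0, "years_employed": 2.0}
baseline = {"income_k": 60.0, "debt_ratio": 0.3, "years_employed": 5.0}
applicant = {"income_k": 50.0, "debt_ratio": 0.5, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, 10.0, applicant, baseline)
for name, delta in ranked:
    print(f"{name}: {delta:+.1f}")
```

The ranked list gives a reviewer or an affected customer a concrete answer to "which factors lowered this score the most", which is the kind of transparency the practice above calls for.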

Ensure AI systems comply with global data privacy laws by implementing robust data governance policies. Encrypt sensitive data, apply anonymization techniques, and obtain user consent where necessary.
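As one illustration of these data governance steps, the sketch below strips direct identifiers from a record and replaces the user ID with a keyed hash before the record is used for training. The field names and the key are placeholders. Note that this is pseudonymization rather than full anonymization: under GDPR, pseudonymized data still counts as personal data, so this reduces exposure but does not remove the data from the regulation's scope.

```python
import hashlib
import hmac

DIRECT_IDENTIFIERS = {"name", "email"}  # hypothetical field names


def pseudonymize(record, secret_key, id_field="user_id"):
    """Return a copy of `record` with identifiers removed or masked.

    Direct identifiers are dropped, and the ID is replaced with a keyed
    hash (HMAC-SHA256) so rows for the same user still link together
    without exposing the original ID.
    """
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(secret_key, str(record[id_field]).encode("utf-8"), hashlib.sha256)
    cleaned[id_field] = digest.hexdigest()
    return cleaned


# Placeholder key for the demo; a real key belongs in a secret store.
key = b"demo-key-load-from-a-secret-store"
row = {"user_id": 1042, "name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
safe = pseudonymize(row, key)
print(sorted(safe))
```

Using a keyed hash instead of a plain hash means someone without the key cannot rebuild the mapping simply by hashing a list of known IDs.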

Form an internal committee to oversee AI ethics and compliance. This team should include legal experts, data scientists, and ethicists to ensure responsible AI deployment.

Monitor global AI regulations and adjust compliance strategies accordingly. Engage with industry experts and participate in AI governance forums to stay ahead of legal developments.

Quinnox offers a comprehensive suite of AI and data services designed to help organizations navigate the complexities of AI compliance. Key offerings include:

Our AI governance frameworks ensure ethical standards, regulatory compliance, security, and transparency in AI initiatives. Through tailored assessments and best practices, they help establish robust governance mechanisms to monitor performance, manage risks, safeguard systems, and build trust. By aligning governance with the enterprise vision, Quinnox enables responsible and secure AI adoption for long-term success.

Our AI-powered compliance services assist organizations in meeting complex regulatory requirements, minimizing risks, and adapting to evolving standards. These services include automating Know Your Customer (KYC) and Anti-Money Laundering (AML) processes, as well as ensuring accurate regulatory reporting.

To enhance privacy and compliance, we provide pre-configured synthetic data generation capabilities integrated into our Quinnox AI (QAI) Studio, a one-stop AI innovation hub designed to turn AI ideas into prototypes in days, not months. Training models on synthetic data instead of real user information supports compliance with regulations like GDPR and CCPA and enables more secure AI model training.
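To make the idea of synthetic data concrete, here is a deliberately naive sketch that builds new rows by sampling each column independently from the values seen in a source table. It is an illustration only and says nothing about how QAI Studio works internally. Independent sampling discards correlations between columns, and rare values can still leak into the output, so production approaches typically add stronger modelling and privacy checks.

```python
import random


def fit_marginals(rows):
    """Collect the observed values of each column, one list per column."""
    columns = {}
    for row in rows:
        for name, value in row.items():
            columns.setdefault(name, []).append(value)
    return columns


def sample_synthetic(columns, n, seed=0):
    """Draw n synthetic rows, sampling every column independently."""
    rng = random.Random(seed)
    return [
        {name: rng.choice(values) for name, values in columns.items()}
        for _ in range(n)
    ]


# Made-up source rows standing in for real customer data.
source = [
    {"age_band": "20-29", "region": "north", "churned": False},
    {"age_band": "30-39", "region": "south", "churned": True},
    {"age_band": "40-49", "region": "north", "churned": False},
]
synthetic = sample_synthetic(fit_marginals(source), n=5)
print(len(synthetic), sorted(synthetic[0]))
```

Each synthetic row mixes values from different source rows, so no output row is guaranteed to correspond to a real individual, although with a table this small, coincidental matches are likely.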

Do you need help navigating the evolving regulatory landscape while enabling responsible, secure, and ethical AI adoption? If so, reach out to our highly experienced AI Squad to discuss your business use case and tailored AI solutions.

AI compliance refers to ensuring that artificial intelligence systems adhere to legal, ethical, and regulatory standards. It’s crucial for businesses to comply to avoid legal repercussions, protect user privacy, prevent bias, and maintain transparency in AI-driven decision-making. Non-compliance can result in fines, lawsuits, and reputational damage.

Key regulations include the European Union’s AI Act, which categorizes AI systems by risk level; the General Data Protection Regulation (GDPR), which governs data privacy; and the United States AI Bill of Rights, which focuses on privacy and preventing algorithmic discrimination.

Key challenges include navigating rapidly evolving regulations, dealing with algorithmic bias, ensuring data privacy and security, and a lack of the in-house expertise needed to comply with complex legal and ethical standards.

Best practices include conducting regular AI audits, implementing transparency and explainability measures, strengthening data governance policies, establishing an AI ethics committee, and staying updated on regulatory changes.

Quinnox offers AI governance frameworks, AI-powered compliance services, and synthetic data generation to help businesses navigate regulatory requirements. These services ensure responsible AI adoption, minimize risks, and support compliance with data privacy regulations like GDPR and CCPA.
